Python code to scrape a Bilibili user's published image-and-text posts (list and detail pages) into a database

2024-07-17 13:20:22


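编程爱好者之家 brings you Python code that scrapes a Bilibili user's published image-and-text posts, both the list and the detail pages, and stores them in a database.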

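1: First, find the API URL that serves the image-and-text post list of the Bilibili user you want to scrape

(PS: fetching the page HTML directly will not work, because the data is loaded from an API.)

To find the API URL, open the browser's developer tools (F12 --> Network) and look for the request whose name starts with space; that request is the API endpoint.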

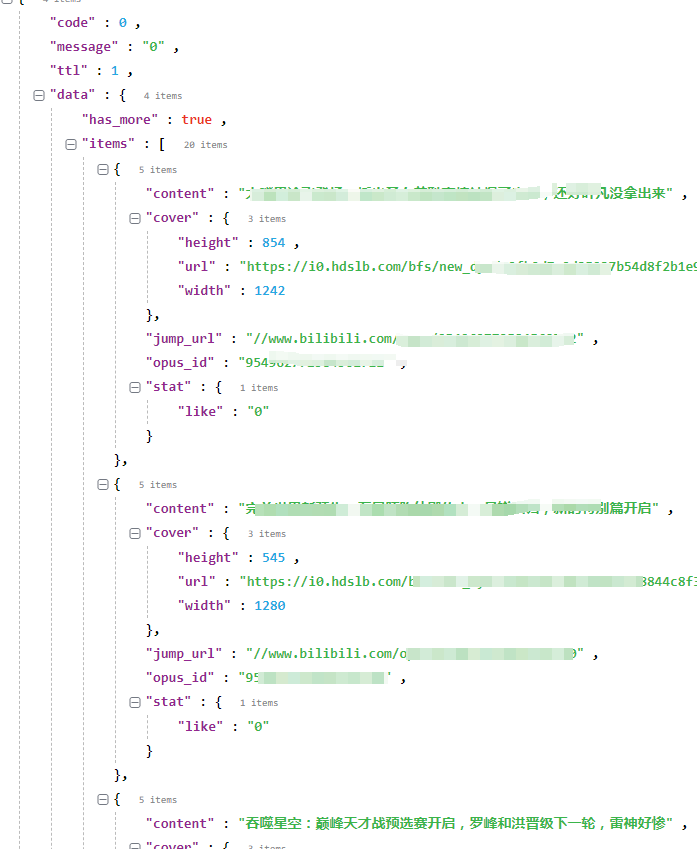

Once formatted, the JSON returned by the API looks like this:
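As a rough guide, and assuming only the fields that the script further down actually reads (data.items, plus each item's jump_url and content), the response can be walked as in the sketch below. The sample payload is a hypothetical, heavily trimmed stand-in with placeholder values; the real response carries many more fields.

```python
import json

# Hypothetical, trimmed sample: only the keys the scraper reads.
# The values are placeholders, not real Bilibili data.
SAMPLE_RESPONSE = """
{
  "data": {
    "items": [
      {"jump_url": "//example.invalid/read/1", "content": "First post title"},
      {"jump_url": "//example.invalid/read/2", "content": "Second post title"}
    ]
  }
}
"""

def extract_items(payload):
    """Return (title, absolute_url) pairs from a parsed list response."""
    items = payload.get("data", {}).get("items", [])
    # jump_url is protocol-relative ('//host/path'), so a scheme is prepended
    return [(item["content"], "https:" + item["jump_url"]) for item in items]

parsed = json.loads(SAMPLE_RESPONSE)
pairs = extract_items(parsed)
for title, url in pairs:
    print(title, url)
```

Checking the real response against this shape in the Network tab first makes it easier to spot when the API changes its field names.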

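2: The full code is as follows:

The code covers fetching the list, fetching each detail page, and downloading the remote images to local storage.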

# -*- coding: utf-8 -*-
import datetime
import os
import random
import re
import time

import requests
from bs4 import BeautifulSoup
from pymysql import Connection

# Create a date-based folder (path/YYYY/YYYYMMDD) if it does not exist yet
def create_folder(path):
    now = datetime.datetime.now()
    year = now.strftime('%Y')      # year
    day = now.strftime('%Y%m%d')   # year, month, day
    foldername = os.path.join(path, year, day)  # full path on disk
    folder = year + "/" + day                   # relative part, reused in the public URL
    os.makedirs(foldername, exist_ok=True)
    return [foldername, folder]

# Return a random Unix timestamp within the next hour (now + 0..3599 seconds)
def get_random_next_hour_timestamp():
    # Current time as a timestamp
    now = time.time()
    # Random minutes and seconds to add
    random_minute = random.randint(0, 59)
    random_second = random.randint(0, 59)
    offset = datetime.timedelta(minutes=random_minute, seconds=random_second)
    # Add the random offset to the current time
    return int(now + offset.total_seconds())

# Download images into a dated folder and return their public URLs in input order
def download_images(image_list, output_folder, domain):
    imgarr = []
    newfolder = create_folder(output_folder)
    headers = {
        "User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/58.0.3029.110 Safari/537.3",
    }
    for index, image_url in enumerate(image_list):
        try:
            response = requests.get(image_url, headers=headers, timeout=10)
            response.raise_for_status()
            # Build the file name from the current time plus a random number
            day = datetime.datetime.now().strftime('%Y%m%d%H%M%S')
            random_number = random.randint(1000, 100000)
            file_name = day + str(random_number) + '.jpg'
            file_path = os.path.join(newfolder[0], file_name)
            with open(file_path, 'wb') as file:
                file.write(response.content)
            imgarr.append(str(domain) + "/" + newfolder[1] + "/" + file_name)
        except (requests.exceptions.RequestException, OSError) as e:
            # Fall back to a default image so the result stays aligned with image_list
            print(f"Error downloading image at index {index}: {e}")
            imgarr.append('https://www.codelovers.cn/default.jpg')
    return imgarr
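download_images stores every file with a .jpg extension, whatever the source format. If that matters for your site, one option is to derive the extension from the URL path. The following is a minimal sketch using only the standard library; guess_extension and ALLOWED_EXTENSIONS are hypothetical names that are not part of the original script, and falling back to .jpg is an assumption chosen to match the script's current behaviour.

```python
import os
from urllib.parse import urlparse

# Extensions we are willing to keep; anything else falls back to the default (an assumption)
ALLOWED_EXTENSIONS = {".jpg", ".jpeg", ".png", ".gif", ".webp"}

def guess_extension(image_url, default=".jpg"):
    """Return the lowercase extension found in the URL path, or a default."""
    path = urlparse(image_url).path          # drops the query string and fragment
    ext = os.path.splitext(path)[1].lower()  # '' when the path has no extension
    return ext if ext in ALLOWED_EXTENSIONS else default

print(guess_extension("https://example.invalid/a/b.PNG?x=1"))
print(guess_extension("https://example.invalid/a/b"))
```

The returned value could replace the hard-coded '.jpg' when the file name is built.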

# Fetch the list API, then scrape and store every post that is not in the database yet
def getList(url):
    headers = {
        "User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/58.0.3029.110 Safari/537.3",
    }
    resp = requests.get(url, headers=headers, timeout=10)
    tags = resp.json()['data']['items']
    datas = []
    # Connect to the database
    conn = Connection(
        host='localhost',
        port=3306,
        user='your_db_username',
        passwd='your_db_password',
        autocommit=True
    )
    cursor = conn.cursor()
    conn.select_db("your_db_name")
    try:
        for tag in tags:
            # Use a fresh dict per item so entries in datas do not alias each other
            result = {
                'url': 'https:' + tag['jump_url'],
                'title': tag['content'],
            }
            # Parameterized query: the driver escapes quotes in the title
            cursor.execute("SELECT id FROM article WHERE title = %s", (result['title'],))
            if cursor.fetchone():
                continue  # already stored, skip it
            infolist = getInfo(result['url'], '/home/uploads/')
            if infolist:
                # Insert the post with a randomized publish time, then mark it as published
                dt = datetime.datetime.fromtimestamp(get_random_next_hour_timestamp())
                nowtime = dt.strftime("%Y-%m-%d %H:%M:%S")
                insertid = time.strftime('%Y%m%d%H') + str(random.randint(1000, 9999))
                cursor.execute("INSERT IGNORE INTO article (id,title,seotitle,fengmian,neirong,`jianjie`,addtime) VALUES (%s, %s, %s, %s, %s, %s, %s)", (insertid, infolist[0], infolist[0], infolist[2], infolist[1], infolist[0], nowtime))
                # Table name kept from the original post; make sure it matches your schema
                upsql = "UPDATE blog_article SET isPublish=1 WHERE id = %s"
                cursor.execute(upsql, (insertid,))
                conn.commit()
            datas.append(result)
    finally:
        conn.close()
    return datas
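The duplicate check in getList looks a post up by its title. Titles routinely contain quote characters, so the value should reach the database as a bound parameter rather than being formatted into the SQL string. The sketch below shows the difference. It uses the standard library's sqlite3 in place of MySQL purely so that it runs without a database server; note that sqlite3 uses ? placeholders where pymysql uses %s. The two-column table and the is_duplicate helper are illustrative assumptions, not the author's schema.

```python
import sqlite3

def is_duplicate(cursor, title):
    """Return True if a post with exactly this title is already stored."""
    cursor.execute("SELECT id FROM article WHERE title = ?", (title,))
    return cursor.fetchone() is not None

conn = sqlite3.connect(":memory:")
cur = conn.cursor()
cur.execute("CREATE TABLE article (id TEXT PRIMARY KEY, title TEXT)")
cur.execute("INSERT INTO article (id, title) VALUES (?, ?)", ("20240717131234", "It's a test"))

tricky_title = "It's a test"
found_existing = is_duplicate(cur, tricky_title)   # the driver handles the apostrophe
found_other = is_duplicate(cur, "Another title")

try:
    # Same lookup built with string formatting: the apostrophe ends the literal early
    cur.execute("SELECT id FROM article WHERE title = '%s'" % tricky_title)
    formatted_query_ok = True
except sqlite3.OperationalError:
    formatted_query_ok = False

conn.close()
print(found_existing, found_other, formatted_query_ok)
```

With string formatting the query fails on an innocent apostrophe; a crafted title could do worse, which is why the bound-parameter form is preferable.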

# Replace several substrings in one pass (applied in dict order)
def replace_strings(text, replacements):
    for old_str, new_str in replacements.items():
        text = text.replace(old_str, new_str)
    return text
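Because replace_strings applies its replacements one after another in dictionary insertion order, an earlier replacement can change what a later one sees. A quick self-contained check, with the function repeated so that the snippet runs on its own and with made-up sample HTML and URLs:

```python
def replace_strings(text, replacements):
    # Apply each replacement in dict insertion order
    for old_str, new_str in replacements.items():
        text = text.replace(old_str, new_str)
    return text

sample = '<figure class="img-box"><img data-src="//example.invalid/a.png"/></figure>'
cleaned = replace_strings(sample, {
    '//example.invalid/a.png': 'https://example.invalid/uploads/a.jpg',  # swap in the local copy
    '<figure class="img-box">': '',   # drop the wrapper's opening tag
    '</figure>': '',                  # and its closing tag
    'data-src=': 'src=',              # make the image load without lazy-loading scripts
})
print(cleaned)
```

This is the same pattern getInfo relies on when it swaps remote image addresses for local ones and then strips the wrapper markup.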

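# Remove every <img> tag whose src contains "lazy.png" and return the resulting HTML
def remove_img_tags_with_lazy_png_bs(html):
    soup = BeautifulSoup(html, 'html.parser')
    # Find all img tags whose src contains "lazy.png"
    for img in soup.find_all("img", src=re.compile(r".*lazy\.png")):
        img.decompose()  # delete the matched img tag
    return str(soup)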
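Both getList and getInfo turn protocol-relative addresses such as //host/path into absolute URLs by prepending https:. That produces a broken URL if a value ever arrives already absolute. The standard library's urljoin handles both cases; in the sketch below, absolutize and BASE_URL are hypothetical names for illustration, and only the scheme of the base matters for protocol-relative inputs.

```python
from urllib.parse import urljoin

# Assumed base; only its scheme is used when src looks like '//host/path'
BASE_URL = "https://www.bilibili.com/"

def absolutize(src, base=BASE_URL):
    """Return an absolute URL for src, or None when src is missing or empty."""
    if not src:
        return None
    return urljoin(base, src.strip())

print(absolutize("//i0.example.invalid/a.png"))
print(absolutize("https://i0.example.invalid/a.png"))
print(absolutize(None))
```

Returning None for a missing attribute also lets the caller skip img tags that have no data-src instead of failing on string concatenation.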

# Fetch a detail page; return [title, cleaned HTML, cover image URL], or '' on failure
def getInfo(url, save_dir):
    imgurl = []
    headers = {
        "User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/58.0.3029.110 Safari/537.3",
    }
    resp = requests.get(url, headers=headers, timeout=10)
    try:
        soup = BeautifulSoup(resp.content, "html.parser")
        content = soup.find('div', id='read-article-holder')
        # Download the images in the post to the local server (tags without data-src are skipped)
        img_tags = [t for t in content.find_all("img") if t.get('data-src')]
        if img_tags:
            imgs = ['https:' + t.get('data-src') for t in img_tags]
            imgurl = download_images(imgs, save_dir, 'https://www.codelovers.cn/uploads')
            # Replace each original image address with its local copy
            dic = {t.get('data-src'): new_src for t, new_src in zip(img_tags, imgurl)}
            newcontent = replace_strings(str(content), dic)
        else:
            newcontent = str(content)
        # Text cleanup: strip wrapper markup so that essentially only <p> tags remain
        newcontent = newcontent.replace("\n", '')
        newcontent = newcontent.replace('<div class="normal-article-holder read-article-holder" id="read-article-holder">', '')
        newcontent = newcontent.replace("</div>", '')
        newcontent = newcontent.replace('<figure class="img-box" contenteditable="false">', '')
        newcontent = newcontent.replace('</figure>', '')
        newcontent = newcontent.replace('data-src=', 'src=')
        newcontent = newcontent.replace('<strong>', '')
        newcontent = newcontent.replace('</strong>', '')
        newcontent = newcontent.replace('<h1>', '<h3>')
        newcontent = newcontent.replace('</h1>', '</h3>')
        newcontent = newcontent.replace('<figcaption class="caption" contenteditable=""></figcaption>', '')
        title = soup.find('p', class_='inner-title').text
        # Use the first downloaded image as the cover, or a default one if there is none
        cover = imgurl[0] if imgurl else 'https://www.codelovers.cn/default.jpg'
        return [title, newcontent, cover]
    except AttributeError:
        # The expected article container or title element was not found
        return ''

Finally, to run the scraper, just call getList(API URL).
